Meta ‘will label AI-generated images’: Nick Clegg

by Dr. Piyush Mathur

In an essay published on Meta’s website on February 6, Nick Clegg, President of Global Affairs at Meta, announced his company’s plan to begin labelling Artificial Intelligence (AI)-generated contents—including audio and video—that get posted on its social media platforms of Facebook, Instagram, and Threads; he also provided a link to this essay in a LinkedIn post he made soon after.

While both the essay’s title and the LinkedIn post mention only AI-generated ‘images’, the essay itself is clear that AI-generated audio and video contents are part of the purview. At the same time, there is no mention in either the essay or the LinkedIn post of anything related to AI-generated text or AI-enabled plagiarism at scale (which has manifested in many forms across the Internet).

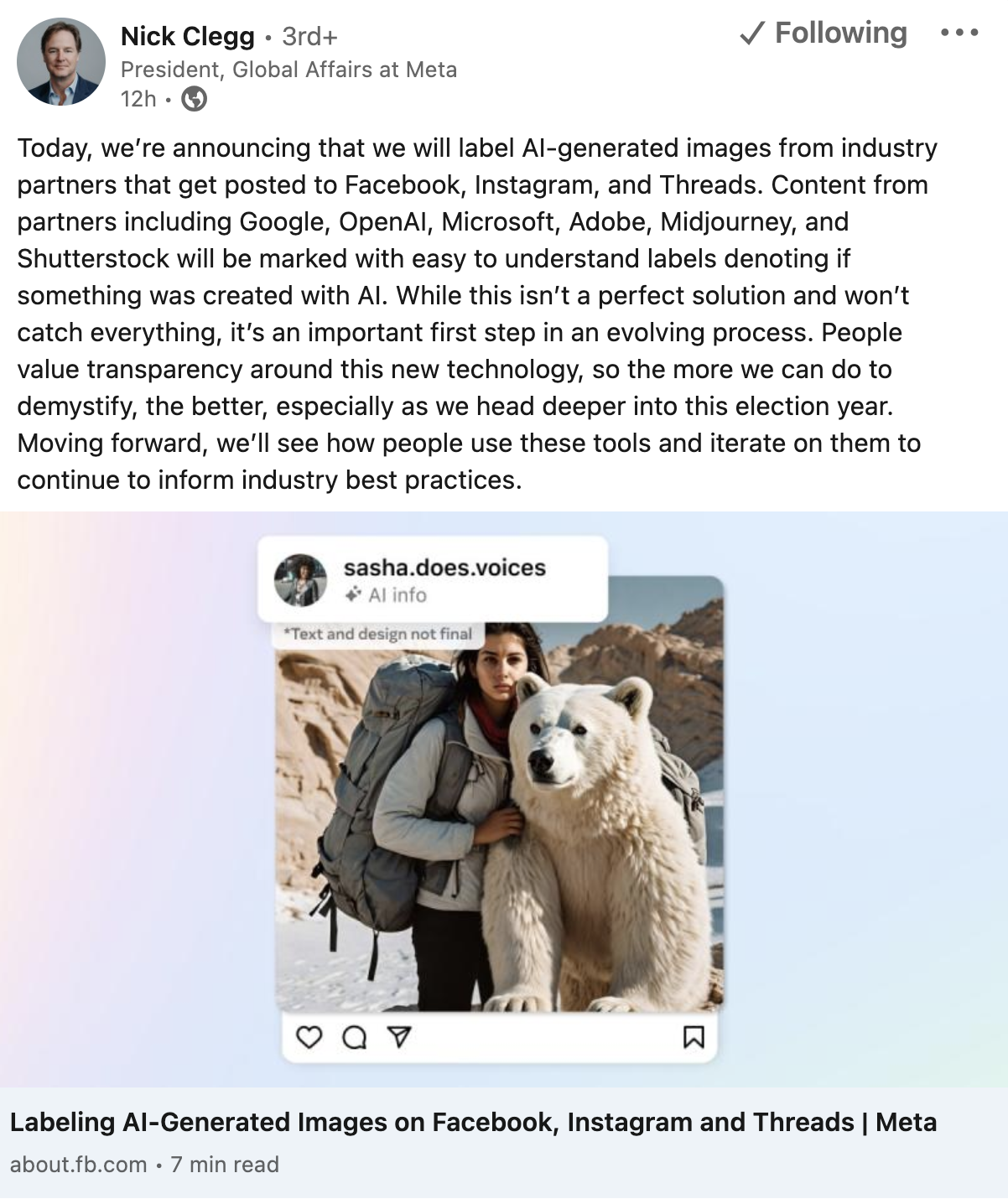

Screenshot of Nick Clegg’s LinkedIn post (February 7, 2024) reporting Meta’s announcement regarding labelling of AI-generated images across its social media platforms

(Screenshot credit: Thoughtfox)

At any rate, up until now, Meta had restricted itself to applying the label ‘Imagined with AI’ to ‘photorealistic content’ created with its own AI tools; from here on, the company would attempt to do the same with external AI-generated images (as well as audio and video contents) posted within its environs, the essay claims. Clegg describes this undertaking as a work in progress whose results would begin to show up as the year advances. ‘We’re building this capability now, and in the coming months we’ll start applying labels in all languages supported by each app,’ he notes.

But Clegg’s announcement also hints at the inevitability of inadequacies and imperfections in Meta’s AI-labelling endeavour. On the one hand, Meta would have to work ‘with industry partners on common technical standards for identifying AI content’, a process that would leave plenty of room for slippage; on the other hand, in real time, the labelling will happen only when Meta’s system is able to detect, in user-submitted contents, ‘indicators that they are AI-generated.’

Users of Meta’s applications would thus have to be prepared for oversights and instances of mislabelling—and if the persistent problem of fake accounts on Facebook is any indication, then this new AI-labelling regime would more than likely disappoint often.

Meanwhile, this corporate-level self-monitoring at scale of AI-generated audio and video contents is promised to have its counterpart at the level of the average user of Meta applications. Clegg notes that Meta is also ‘adding a feature for people to disclose when they share AI-generated video or audio so we can add a label to it,’ stressing that the company will ‘require people to use this disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered’.

People may also be penalized, or their AI-mixed content may have a relatively alarming label imposed on it, if it ‘creates a particularly high risk’ of materially deceiving the public, the essay stresses.

In the LinkedIn post made after the publication of his essay, Clegg underscored that Meta is not offering ‘a perfect solution’ but is only taking ‘an important first step in an evolving process.’ Whichever way one looks at it, the global importance of that first step certainly can’t be overstated, given that Clegg’s essay also mentions that ‘a number of important elections’ are to take place in 2024.

What neither Clegg’s essay nor his LinkedIn post notes, though, is that Mark Zuckerberg, Meta’s Co-founder, Executive Chairman and Chief Executive Officer, was upbraided by US senators as recently as January 31 for failing to implement enough safeguards to protect children from online threats on his company’s applications.

Writing for USA Today, Bailey Schulz reported at the time that the senators had released ‘internal documents from Meta’ indicating that Zuckerberg had rejected requests in 2021 ‘to add dozens of employees to focus on children’s well-being and safety.’ Referring to those documents, Sen. Richard Blumenthal, a Democrat from Connecticut, made the following comment (as Schulz highlights): ‘(This) is the reason why we can no longer trust Meta, and, frankly, any of the other social media to in fact grade their own homework.’

It remains to be seen how social-media platforms and the Internet industry generally will grade themselves on AI-labelling.